
Scribbling

[System Design] Rate Limiter

In a network system, a rate limiter controls the rate of traffic that a client or a service sends. Most APIs enforce some form of rate limiting. A rate limiter 1) prevents denial-of-service (DoS) attacks, 2) reduces costs by cutting down on excess requests, and 3) prevents servers from being overloaded.

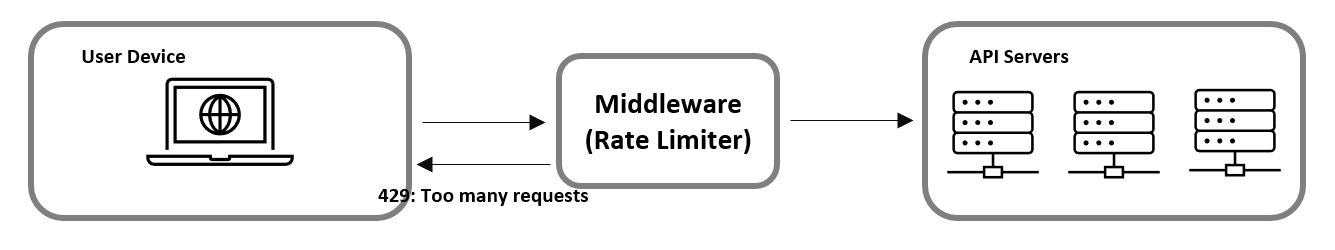

Where should the rate limiter be placed?

- On the server side, as part of the API servers.

- As a separate middleware layer that sits in front of the API servers.

In a cloud microservice architecture, rate limiting is usually implemented in a component called an API gateway. An API gateway is typically a fully managed service that supports rate limiting, SSL termination, authentication, IP whitelisting, and more.

Rate-limiting algorithms

1) Token bucket

A token bucket is a container with a fixed capacity of tokens. Tokens are added to the bucket at a fixed rate, and once the bucket is full, any extra tokens overflow and are discarded. Each request consumes one token when it is handled. If the bucket has no tokens left, the request is dropped.

This algorithm takes two parameters:

- bucket size: the maximum number of tokens the bucket can hold

- refill rate: the number of tokens added to the bucket per second

Generally, a separate bucket exists for each API endpoint.

The token bucket algorithm is intuitive and easy to implement, and it allows short bursts of traffic as long as tokens remain. However, tuning its two parameters properly can be difficult.
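The token bucket described above can be sketched as a small single-process Python class. This sketch makes three assumptions. Tokens are refilled lazily on each call, based on elapsed time, rather than by a periodic background job; the effect is the same. The token count is a float. The class name `TokenBucket` and the injectable `clock` parameter are illustrative choices, not part of any library.

```python
import time
from typing import Callable


class TokenBucket:
    """Token bucket rate limiter: a single-process sketch, not thread-safe."""

    def __init__(self, capacity: int, refill_rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity        # bucket size: maximum tokens held
        self.refill_rate = refill_rate  # tokens added per second
        self.clock = clock              # injectable so the demo is deterministic
        self.tokens = float(capacity)   # start with a full bucket
        self.last_refill = clock()

    def _refill(self) -> None:
        now = self.clock()
        # Credit the tokens earned since the last refill; extra tokens overflow.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.last_refill) * self.refill_rate)
        self.last_refill = now

    def allow(self) -> bool:
        """Consume one token and return True, or return False to drop the request."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Demo with a fake clock: capacity 3, refilled at 1 token per second.
now = [0.0]
bucket = TokenBucket(capacity=3, refill_rate=1.0, clock=lambda: now[0])
print([bucket.allow() for _ in range(4)])  # [True, True, True, False]
now[0] = 2.0  # two seconds later, two tokens have been added
print([bucket.allow() for _ in range(3)])  # [True, True, False]
```

The text above keeps a separate bucket for each API endpoint. Under that convention, a service could hold one `TokenBucket` per endpoint in a dict keyed by path. A production limiter would also need locking or an atomic shared store.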

2) Leaky bucket

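The leaky bucket algorithm keeps incoming requests in a first-in-first-out queue (the bucket), and requests are removed from the queue and processed at a fixed rate. If the queue is full when a request arrives, the request is dropped. This design yields a stable outflow rate. However, a burst of traffic can fill the queue with old requests, so the most recent requests may be dropped under heavy traffic.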
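Here is a minimal Python sketch of the leaky bucket as a bounded queue that a worker drains at a fixed rate. It assumes a single process and an injectable `clock`. The class `LeakyBucket` and its `offer`/`leak` methods are hypothetical names used for illustration.

```python
import time
from collections import deque
from typing import Any, Callable, Deque, List


class LeakyBucket:
    """Leaky bucket: a bounded FIFO queue drained at a fixed rate (sketch)."""

    def __init__(self, capacity: int, outflow_rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity          # maximum number of queued requests
        self.outflow_rate = outflow_rate  # requests processed per second
        self.clock = clock
        self.queue: Deque[Any] = deque()
        self.last_leak = clock()

    def offer(self, request: Any) -> bool:
        """Enqueue an incoming request; return False if it is dropped."""
        if not self.queue:
            # Queue is idle: discard the slots that passed while it was empty,
            # so idle time is never "saved up" and released later as a burst.
            self.leak()
        if len(self.queue) >= self.capacity:
            return False
        self.queue.append(request)
        return True

    def leak(self) -> List[Any]:
        """Called by a worker: pop the requests whose processing slots have come up."""
        slots = int((self.clock() - self.last_leak) * self.outflow_rate)
        processed = [self.queue.popleft() for _ in range(min(slots, len(self.queue)))]
        # Advance by whole slots only, keeping any fractional progress.
        self.last_leak += slots / self.outflow_rate
        return processed


# Demo with a fake clock: room for 2 queued requests, 1 request processed per second.
now = [0.0]
bucket = LeakyBucket(capacity=2, outflow_rate=1.0, clock=lambda: now[0])
print([bucket.offer(r) for r in ["a", "b", "c"]])  # [True, True, False]
now[0] = 1.0
print(bucket.leak())                               # ['a']
print(bucket.offer("d"))                           # True
```

Request "c" is dropped because the queue is full, even though it is the newest request. This is the drawback noted above.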

3) Window algorithms

- fixed window counter

- sliding window log

- sliding window counter
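The three window algorithms listed above differ in how they count requests:

- A fixed window counter splits time into fixed windows, each with its own counter. Bursts at a window edge can let through more requests than the limit within a short span.
- A sliding window log stores the timestamp of every request. It is accurate but uses more memory.
- A sliding window counter estimates the rolling-window count as the current window's count plus the previous window's count weighted by how much of it still overlaps the rolling window.

The single-process Python sketch below assumes an injectable `clock`. The class names are illustrative. This sliding window log variant records only accepted requests; other variants also log rejected ones.

```python
import time
from collections import deque
from typing import Callable, Deque, Optional

Clock = Callable[[], float]


class FixedWindowCounter:
    """Allow at most `limit` requests in each fixed window of `window` seconds."""

    def __init__(self, limit: int, window: float, clock: Clock = time.monotonic) -> None:
        self.limit, self.window, self.clock = limit, window, clock
        self.current_window: Optional[int] = None
        self.count = 0

    def allow(self) -> bool:
        window_id = int(self.clock() // self.window)
        if window_id != self.current_window:  # a new window starts: reset the counter
            self.current_window, self.count = window_id, 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


class SlidingWindowLog:
    """Keep a timestamp log and allow at most `limit` requests in any rolling window."""

    def __init__(self, limit: int, window: float, clock: Clock = time.monotonic) -> None:
        self.limit, self.window, self.clock = limit, window, clock
        self.log: Deque[float] = deque()  # timestamps of accepted requests

    def allow(self) -> bool:
        now = self.clock()
        # Evict timestamps that are outside the rolling window (now - window, now].
        while self.log and self.log[0] <= now - self.window:
            self.log.popleft()
        if len(self.log) < self.limit:
            self.log.append(now)
            return True
        return False


class SlidingWindowCounter:
    """Estimate the rolling-window count from the current and previous fixed windows."""

    def __init__(self, limit: int, window: float, clock: Clock = time.monotonic) -> None:
        self.limit, self.window, self.clock = limit, window, clock
        self.current_window: Optional[int] = None
        self.current_count = 0
        self.previous_count = 0

    def allow(self) -> bool:
        now = self.clock()
        window_id = int(now // self.window)
        if window_id != self.current_window:
            # Carry the old count over only if that window is directly adjacent.
            adjacent = self.current_window is not None and window_id == self.current_window + 1
            self.previous_count = self.current_count if adjacent else 0
            self.current_window, self.current_count = window_id, 0
        # Weight the previous window by how much of it still overlaps the rolling window.
        overlap = 1 - (now % self.window) / self.window
        estimate = self.current_count + self.previous_count * overlap
        if estimate < self.limit:
            self.current_count += 1
            return True
        return False


# Demo: limit 3 per 10 s; three requests at t=9, then one more at t=10.
now = [9.0]
for limiter in (FixedWindowCounter(3, 10, lambda: now[0]),
                SlidingWindowLog(3, 10, lambda: now[0]),
                SlidingWindowCounter(3, 10, lambda: now[0])):
    now[0] = 9.0
    burst = [limiter.allow() for _ in range(3)]
    now[0] = 10.0
    print(type(limiter).__name__, burst, limiter.allow())
# FixedWindowCounter [True, True, True] True   <- 4 requests within 1 s get through
# SlidingWindowLog [True, True, True] False
# SlidingWindowCounter [True, True, True] False
```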

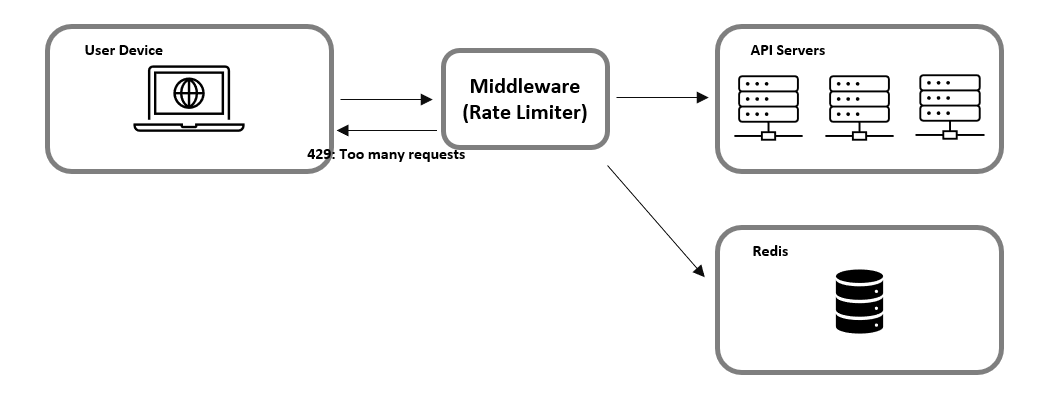

Architecture

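The basic idea behind a rate limiter is simple: keep counters that track how many requests each client has sent, and reject requests once a counter exceeds the limit. Where should these counters be stored? A disk-based database is a poor choice because disk access is slow. An in-memory store such as Redis is a popular alternative, since it is fast and supports time-based key expiration.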

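A client sends a request to the rate-limiting middleware. The middleware fetches the client's counter from Redis and checks whether the limit has been reached. If it has not, the request is forwarded to the API server and the counter is incremented; otherwise, the request is throttled.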
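Below is a minimal single-process Python sketch of this flow, using a fixed window counter per client. A real Redis server is out of scope, so `InMemoryCounterStore` is a hypothetical stand-in. Its `incr` and `expire` methods only mimic the Redis `INCR` and `EXPIRE` commands. The function `rate_limit_middleware`, the key format, and the default limit of 5 requests per 60-second window are also illustrative assumptions. The sketch increments first and then compares against the limit, a common variant that folds "check, then increment" into one step.

```python
import time
from typing import Callable, Dict, Tuple


class InMemoryCounterStore:
    """Hypothetical stand-in for Redis: mimics INCR and EXPIRE in a single process."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self.clock = clock
        self.values: Dict[str, int] = {}
        self.expires_at: Dict[str, float] = {}

    def _purge_if_expired(self, key: str) -> None:
        deadline = self.expires_at.get(key)
        if deadline is not None and self.clock() >= deadline:
            self.values.pop(key, None)
            self.expires_at.pop(key, None)

    def incr(self, key: str) -> int:
        """Like Redis INCR: increment the counter (a missing key starts at 0)."""
        self._purge_if_expired(key)
        self.values[key] = self.values.get(key, 0) + 1
        return self.values[key]

    def expire(self, key: str, seconds: float) -> None:
        """Like Redis EXPIRE: delete the key after `seconds`."""
        self.expires_at[key] = self.clock() + seconds


def rate_limit_middleware(client_id: str, request: str, store: InMemoryCounterStore,
                          forward: Callable[[str], str],
                          limit: int = 5, window: int = 60) -> Tuple[int, str]:
    """Check the client's counter before forwarding the request to the API server."""
    # One counter per client per fixed window, e.g. "rate:alice:0".
    window_id = int(store.clock() // window)
    key = f"rate:{client_id}:{window_id}"
    count = store.incr(key)
    if count == 1:
        store.expire(key, window)  # stale counters clean themselves up
    if count > limit:
        return 429, "Too Many Requests"
    return 200, forward(request)


# Demo with a fake clock and a hypothetical API server.
now = [0.0]
store = InMemoryCounterStore(clock=lambda: now[0])
api_server = lambda req: f"handled {req}"
print([rate_limit_middleware("alice", "GET /items", store, api_server, limit=2)[0]
       for _ in range(3)])  # [200, 200, 429]
```

With a real Redis, `INCR` and `EXPIRE` would usually be sent together, for example in a `MULTI`/`EXEC` transaction or a Lua script. That way a crash between the two commands cannot leave a counter without a TTL.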

When a request is throttled, it can either be dropped or sent to a message queue. The latter option lets the request be processed later.
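Here is a minimal Python sketch of the two options for throttled requests. A `collections.deque` stands in for a real message queue system, which is an assumption made only to keep the example self-contained. The functions `handle_request` and `drain` are hypothetical names. Status strings 429 (Too Many Requests) and 202 (Accepted) are standard HTTP codes, used here as plain labels.

```python
from collections import deque
from typing import Callable, Deque, List, Optional


def handle_request(request: str, allowed: bool, process: Callable[[str], str],
                   backlog: Optional[Deque[str]] = None) -> str:
    """Route a request after the rate limiter has decided whether it is allowed."""
    if allowed:
        return process(request)
    if backlog is None:
        return "429 Too Many Requests"  # option 1: drop the request
    backlog.append(request)             # option 2: defer it to the queue
    return "202 Accepted"


def drain(backlog: Deque[str], process: Callable[[str], str], budget: int) -> List[str]:
    """Later (e.g. once the limit resets), process up to `budget` queued requests in FIFO order."""
    results: List[str] = []
    while backlog and len(results) < budget:
        results.append(process(backlog.popleft()))
    return results


# Demo with a hypothetical API server call.
process = lambda r: f"done {r}"
pending: Deque[str] = deque()
print(handle_request("r1", allowed=False, process=process))                   # 429 Too Many Requests
print(handle_request("r2", allowed=False, process=process, backlog=pending))  # 202 Accepted
print(drain(pending, process, budget=10))                                     # ['done r2']
```

Deferring requests to a queue trades latency for completeness. It suits work such as order processing that must eventually happen, while dropping is simpler when clients can just retry.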
